Is Hardware Chasing Software or Vice-Versa for Generative AI Workloads?

Generative AI has changed the world profoundly since the release of ChatGPT in November 2022. The significance of ChatGPT is that it supplied the hard last 10% that prior generations of auto-regressive generative models were missing: a significant scale-up over those earlier models.

In addition, its use of reinforcement learning from human feedback (RLHF) to improve both the data and the model set it apart.

Large Language Models have profound implications for software and hardware scaling.

Is Hardware Chasing Software or Vice-Versa?

Since the days of the early microprocessor, the industry has been on two sides of the equation, asking the following questions:

Q1: Will software ever catch up and use the power of the hardware?

Q2: Will hardware ever catch up to software requirements?

Depending on where we were in the hardware or software cycle, Q1 or Q2 was asked more often:

For instance, in the early days of x86 (8086) processors, the operating system folks wanted more memory than a 16-bit address could reach (64 KB per segment, 1 MB in total with the 8086's segmented addressing). Later, when 32-bit x86 processors (the architecture introduced with the i386) hit 1 GHz clock frequencies, most software was trying to use the faster hardware to do more, essentially catching up to the hardware.

Some technical segments always require outsized computing resources, such as chip design, simulation, weather prediction, and video games.

LLM compute requirements seem similar to the 32-bit to 64-bit transition in x86 microprocessor architecture:

Before 64-bit microprocessors, the maximum addressable memory with 32-bit processors was 4 GB (2^32 bytes).

The 4 GB memory limit was not a limitation for most software applications. The applications that felt the 32-bit address limit were video apps, simulation software, EDA (chip design) software, and CAD software.

The 4 GB memory constraint led to tremendous innovation in software architecture, such as distributed multiprocessing and lightweight multi-threading in the 2003 Linux kernel. It was a breakthrough for software developers but was still limited to 32 bits and 4 GB of addressable memory.

AMD released the Opteron, the first AMD64-based processor, in 2003. Intel followed with its own x86-64 processors in 2004.

In principle, a 64-bit microprocessor could address 16 EB (16 × 1024^6 = 2^64 = 18,446,744,073,709,551,616 bytes) of memory, way beyond the 4 GB limit!
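The address-space arithmetic is easy to check. The following is a minimal sketch, assuming a flat (non-segmented) address space and binary units (KiB, GiB, EiB), which is what the "GB" and "EB" figures in this post informally mean. The function names `addressable_bytes` and `human` are made up for illustration.

```python
# Sketch: how many bytes a flat address space of a given width can reach.
# Assumes one byte per address and binary (1024-based) units.

UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]


def addressable_bytes(bits: int) -> int:
    """Number of distinct byte addresses for a pointer of `bits` width."""
    return 2 ** bits


def human(n: int) -> str:
    """Render an exact multiple of 1024 in the largest fitting binary unit."""
    i = 0
    while n >= 1024 and n % 1024 == 0 and i < len(UNITS) - 1:
        n //= 1024
        i += 1
    return f"{n} {UNITS[i]}"


for bits in (16, 32, 64):
    total = addressable_bytes(bits)
    print(f"{bits}-bit: {total:,} bytes = {human(total)}")
```

Going from 32 to 64 bits does not double the address space; it multiplies it by 2^32, which is why the 4 GB ceiling disappeared for practical purposes rather than merely moving up.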

Today, we are at a similar crossroads, where DeepSeek has created a SOTA model at parity with OpenAI's GPT-4o, o1, and o3, with an 80% reduction in GPU usage.

Is the DeepSeek moment a precursor to a hardware breakthrough, similar to the 32-bit to 64-bit transition?

If semiconductor cycles are a good predictor, cheaper and more efficient hardware may be on the horizon, and software optimizations won't matter.

Many of the technical efforts that went into working around the 32-bit, 4 GB memory limit were made redundant by the 64-bit address space, though some are still useful.

However, a tremendous amount of innovation occurred on the software side precisely because it was constrained by the 4 GB address space of 32-bit architectures!

The DeepSeek moment may be a precursor to breakthroughs in both hardware and software: energy savings, accelerated computing, and new AI algorithms.

The Cycle Continues:

(The following paragraphs were written by LLMs)

As we witness the development of more efficient and cost-effective hardware for AI applications, we're reminded of the cyclical nature of technological progress.

The efforts invested in optimizing software for current hardware limitations may soon be superseded by new hardware architectures, much like how the 64-bit memory address space made the struggle with 32-bit memory limitations a thing of the past.

This ongoing dance between software and hardware continues to drive innovation, pushing the boundaries of what's possible in computing.

As we stand on the brink of another potential leap forward, it's clear that this cyclical chase between software and hardware is far from over. It will continue to shape the future of technology for years to come.

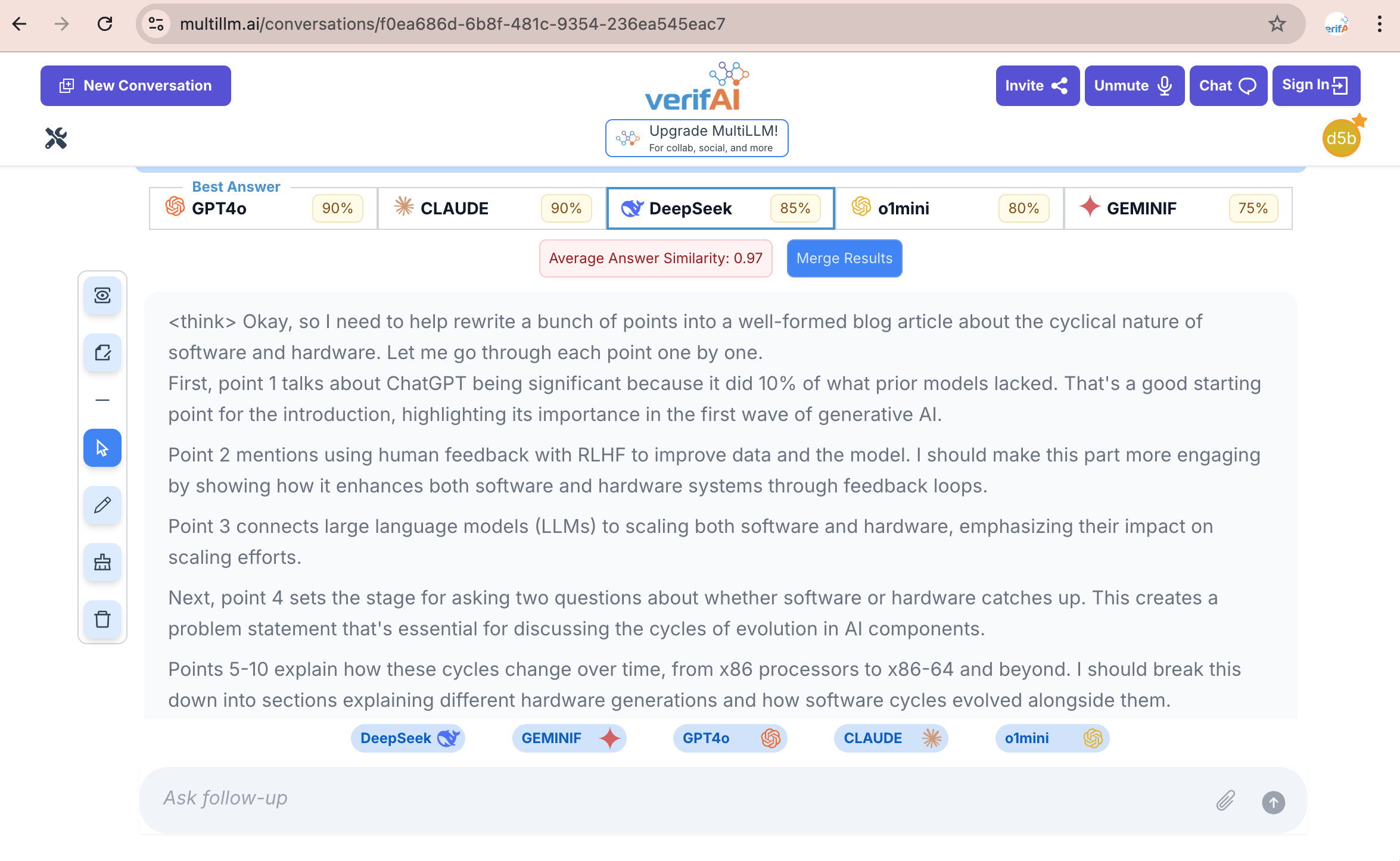

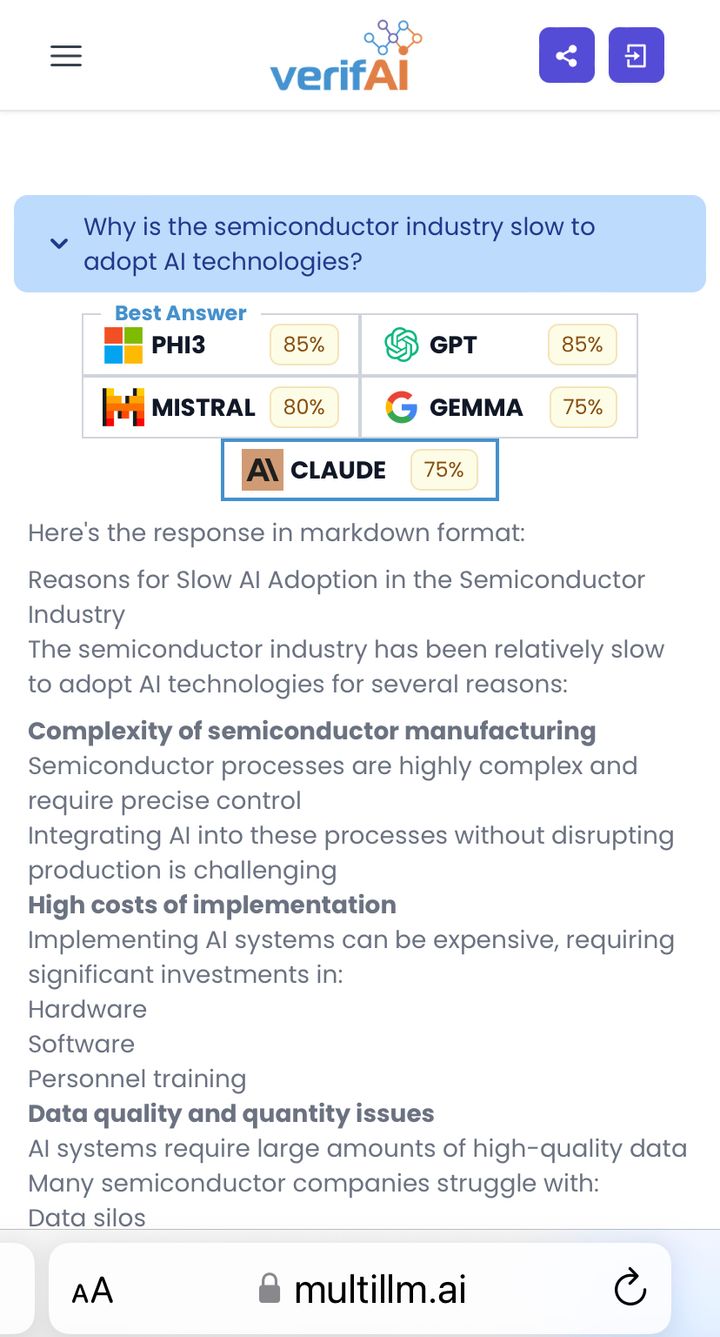

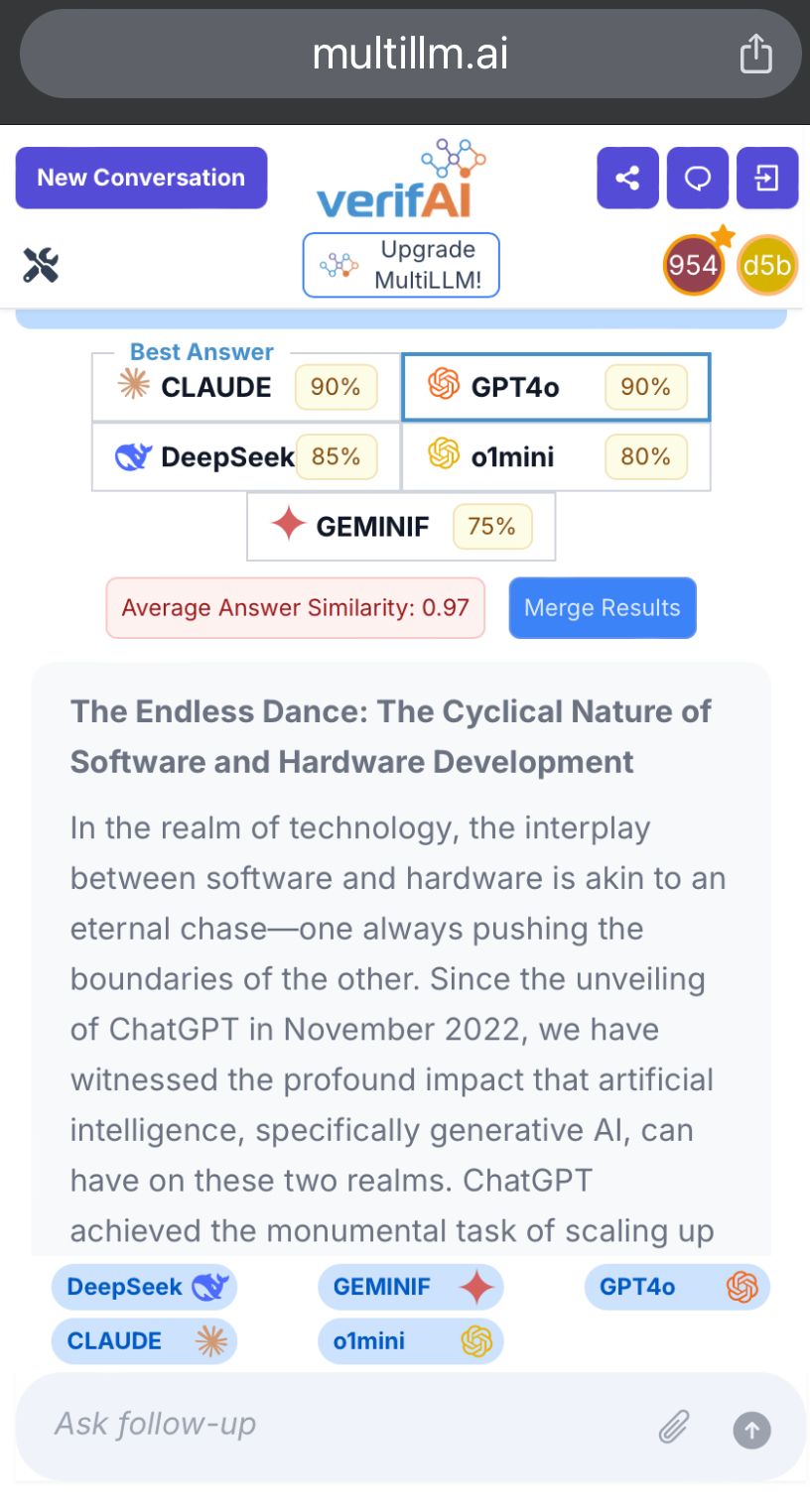

Here is the AI version of this human blog:

https://multillm.ai/conversations/f0ea686d-6b8f-481c-9354-236ea545eac7