Which LLM is the better storyteller?

Author: Pooja Krishan

To check which LLM in VerifAI's MultiLLM App is better at short stories, I came up with two prompts – ‘Write the shortest story in the world’, and ‘Tell me a scary four-word story’. Let’s see how the LLMs performed!

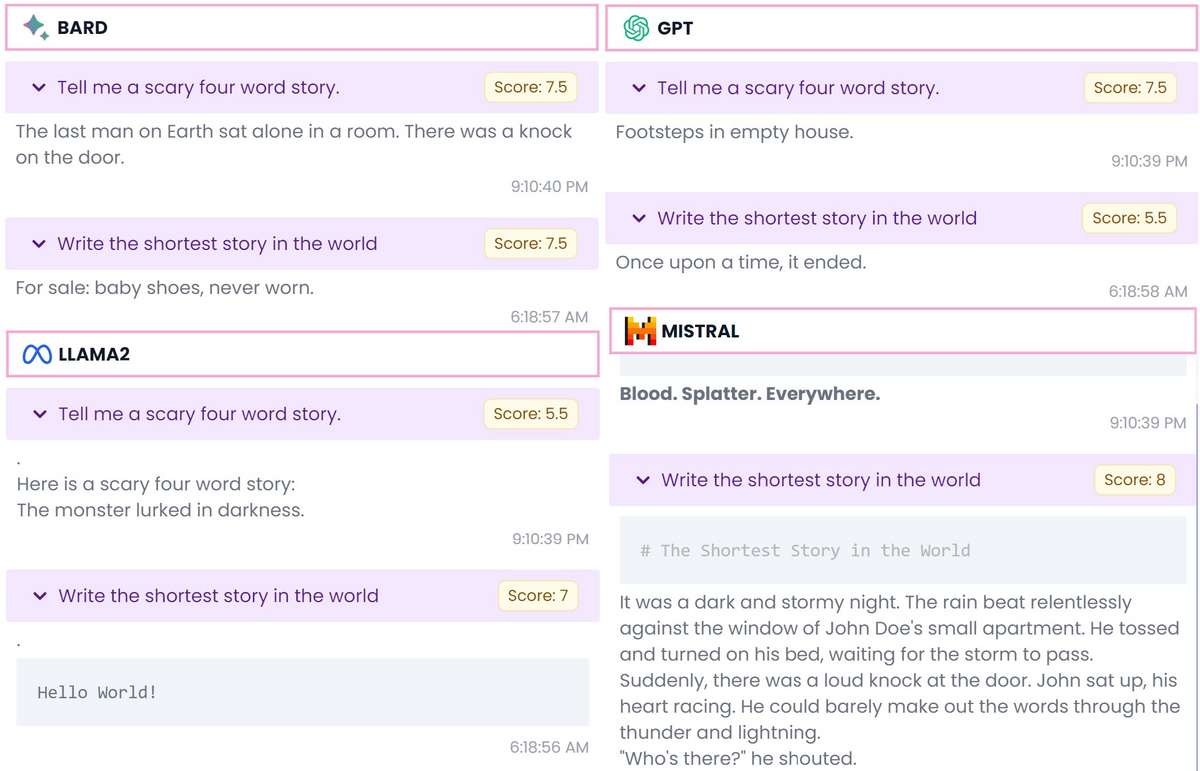

Prompt 1: "Write the shortest story in the world."

Response generated by Google BARD:

‘For sale: baby shoes, never worn.’

This one-line story is open to interpretation, and I interpret it as a pair of small shoes, once meant for a child, is now listed for sale—a poignant reminder of dashed dreams and the quiet resilience of moving forward. The LLM has been able to capture the micro emotions and empathy involved in a couple’s dreams being shattered pretty well.

Response generated by OpenAI’s GPT:

‘Once upon a time, it ended.’

Beginning with the cliched opening statement, GPT’s story does not make any sense.

Response generated by Meta's LLAMA:

‘Hello World!’

This greeting, though famous among programmers as the first piece of code implemented while learning a language, is no story.

Response generated by MISTRAL:

‘It was a dark and stormy night. The rain beat relentlessly against the window of John Doe's small apartment. He tossed and turned on his bed, waiting for the storm to pass. Suddenly, there was a loud knock at the door. John sat up, his heart racing. He could barely make out the words through the thunder and lightning. "Who's there?" he shouted. There was no answer. The storm raged on, and John curled up under his blankets, too scared to even get out of bed. Days passed, and the storm never seemed to subside. John became more and more isolated in his apartment, cut off from the outside world. Finally, one day, the storm stopped. John ventured out of his apartment, and to his surprise, he saw that the world outside was transformed. The sun shone brightly, and the sky was a brilliant blue. John realized that the storm had been a metaphor for his own life. He had been stuck in his own little world, too afraid to face the challenges that lay outside. But now, he was ready to embrace the world and all its wonders. The end.’

MISTRAL might have generated a story, but it paid no heed to the constraint in the question of having to generate the world's shortest story.

Analysis of LLM outputs and MultiLLM ranking:

According to me, Google’s BARD produced the best shortest story. It understood the question well and generated an accurate and complete response that triggers a wide range of emotions from the reader.

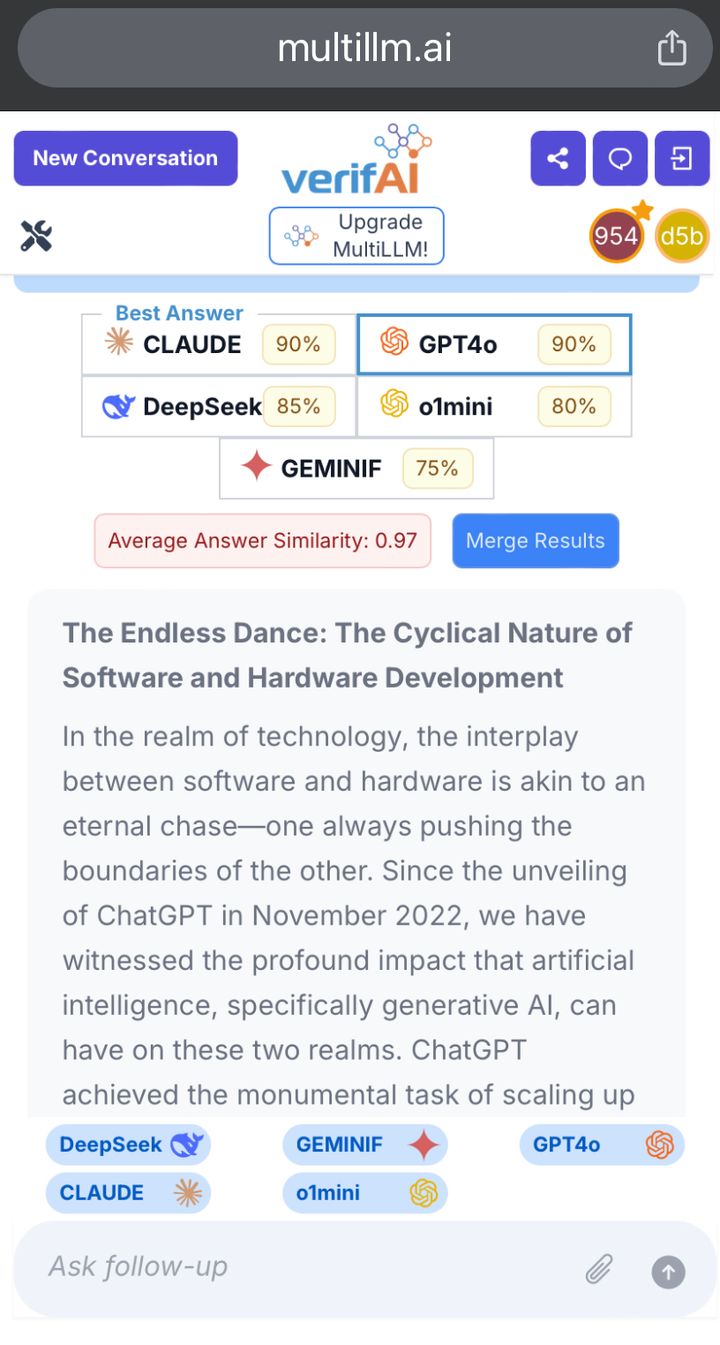

Let us check how MultiLLM’s ranking function does on these responses. It ranks GPT to be the lowest with a score of 5.5. This makes sense as GPT’s response is utter nonsense. It is not even a meaningful sentence. It rates MISTRAL the highest with a score of 8. Although MISTRAL gave us a great story, it misunderstood the fundamental point in the question of writing a short story. MultiLLM gives Google’s BARD the second-highest score of 7.5, which seems like a good decision as BARD appears in the top two, and has generated a great response.

Prompt 2: "Tell me a scary four-word story."

Response generated by Google BARD:

‘The last man on Earth sat alone in a room. There was a knock on the door.’

Though it is a scary short story, it is not four words and does not adhere to the question.

Response generated by OpenAI’s GPT:

‘Footsteps in empty house.’

GPT has performed extremely well and has indeed produced a four-word scary story.

Response generated by Meta's LLAMA:

‘The monster lurked in darkness.’

Although it talks of a monster and darkness, it isn’t necessarily scary.

Response generated by MISTRAL:

‘Blood. Splatter. Everywhere.’

MISTRAL has tried to come up with a scary story but it lacks creativity and is composed of only three words.

Analysis of LLM outputs and MultiLLM ranking:

MultiLLM’s ranking has given Meta's LLAMA a score of 5.5 since it is not four words and is also not scary. MultiLLM has ranked the LLMs other than LLAMA with a score of 7.5. In my opinion, since GPT was able to produce a scary story and satisfy the constraints in the question of using just 4 words, it should have ranked GPT higher.

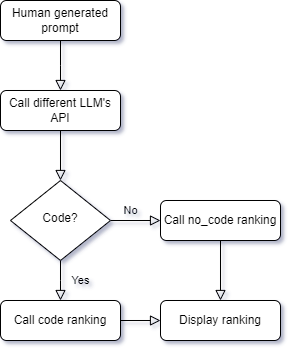

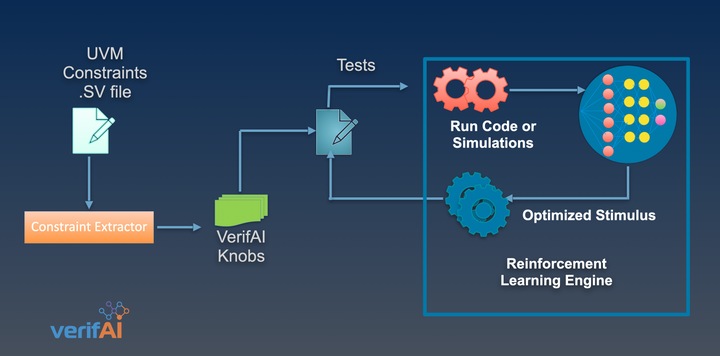

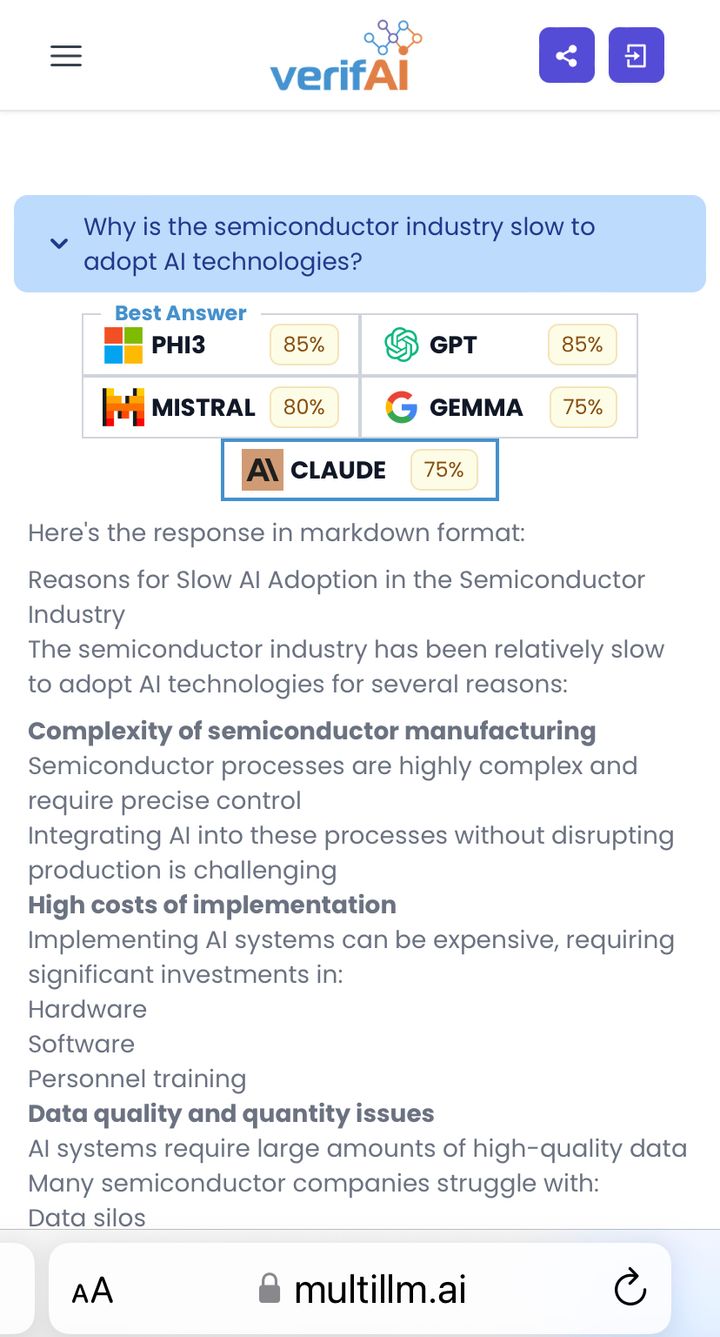

What is the significance of VerifAI's MultiLLM that ranks LLM outputs?

VerifAI’s MultiLLM is unique as it not only pools the responses from multiple large language models in one place but offers a comprehensive ranking function to identify the most relevant output. It takes inspiration from Google's Page Rank algorithm, where relevance of a page is ranked higher than just frequently occurring keywords.

It is important to measure the performance, training techniques, number of tokens used, and other aspects of large language models for benchmarking but the end-user is most affected by the quality of the outputs LLMs generate, and hence it is vital to measure the relevance of individual outputs generated by different large language models rather than just the performance of the models.